Spotify Figma Plugin

As a designer at Spotify, the primary visual asset you work with is the artwork that represents content in the app. There is artwork for everything from albums to playlists to podcasts to audiobooks, and that list is growing. But for a long time, the process for getting artwork into a design was painfully slow and problematic. Unsatisfied with the existing tools, I began investigating a better solution. This led to building the now widely used Spotify Figma plugin, which I've continued to maintain and improve in the years since its release.

Existing workflow

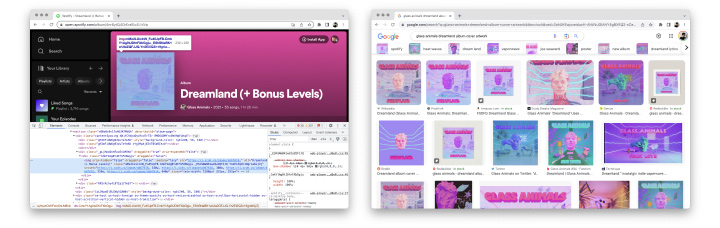

I surveyed other designers to see how they were getting artwork into designs. Responses included taking screenshots of the desktop app, taking screenshots on a phone and emailing them to themselves, using the web inspector to download the artwork from the web player, and even Google image search.

Beyond being laborious, these methods produced poor results. Images could be low resolution or overly compressed. And worse, they could be outdated or even the wrong artwork altogether. Google image search can turn up some odd results. And once an image was downloaded, it still took multiple additional steps to get it into the right spot in a design.

A better solution

The ideal solution, I believed, would do three things: retrieve accurate and up-to-date artwork, provide high-resolution images, and involve as few steps as possible.

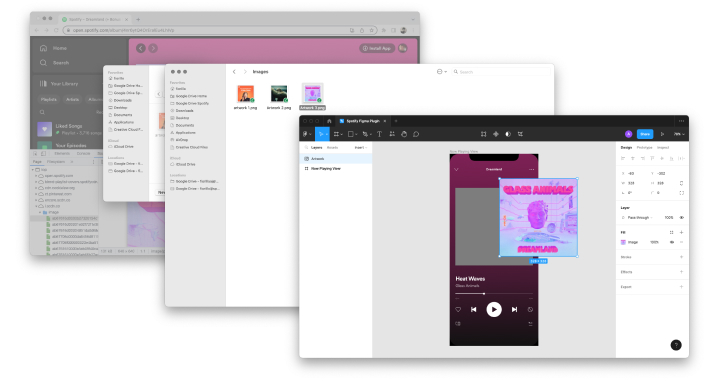

Having built Sketch and Figma plugins in the past, a Figma plugin felt like the obvious solution. It would allow for retrieving artwork without ever needing to switch apps. Also, Figma makes it really easy to build plugins, and Spotify’s web API is excellent and well-documented. So, I got started.

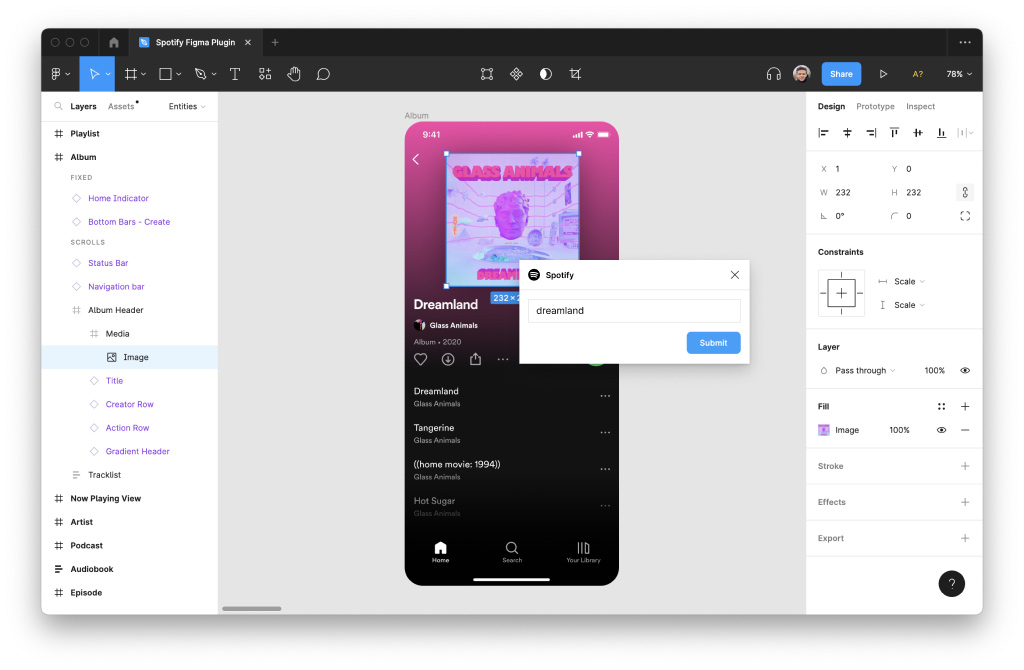

Proof of concept

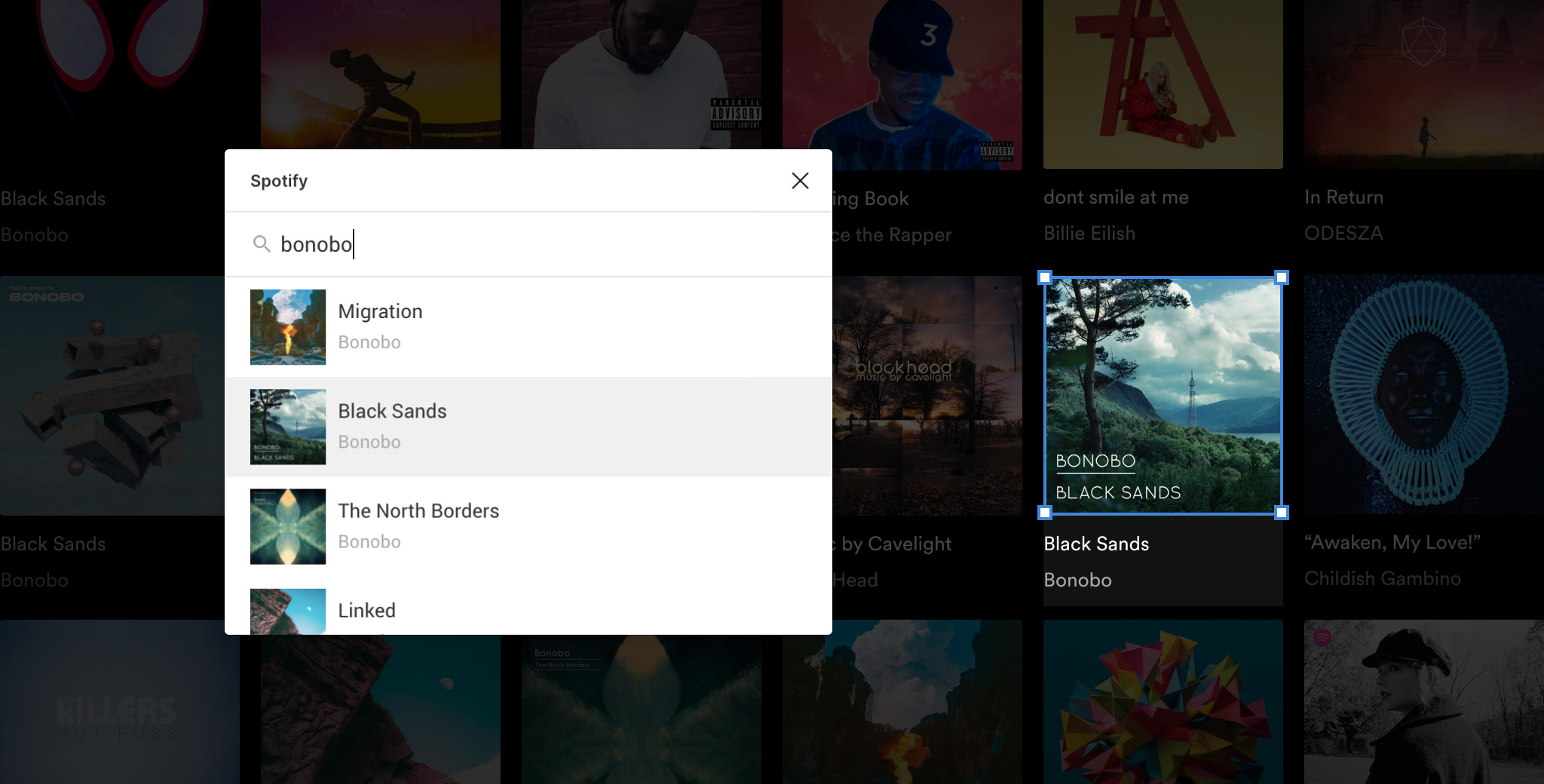

The initial version was simply a text box. It took a search term, and filled a layer with the artwork for the top result returned. To start, it only supported albums.
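The flow described above can be sketched in a few small functions. This is a minimal illustration, not the plugin's actual code: the function names are hypothetical, and it assumes Spotify's Web API search endpoint and its `images` array of `url`, `width`, and `height` entries. The returned paint follows the shape of an image fill in Figma's plugin API.

```typescript
// Shape of one entry in the `images` array Spotify's Web API returns
// for an album. Dimensions may be null for some content.
interface SpotifyImage {
  url: string;
  width: number | null;
  height: number | null;
}

// Build a search request for the single top album result.
// (Hypothetical helper; the request also needs an OAuth bearer token.)
function albumSearchUrl(term: string): string {
  const q = encodeURIComponent(term.trim());
  return `https://api.spotify.com/v1/search?q=${q}&type=album&limit=1`;
}

// Pick the widest image so the fill is as high resolution as possible.
// Returns undefined when the result has no artwork.
function largestImage(images: SpotifyImage[]): SpotifyImage | undefined {
  return images.reduce<SpotifyImage | undefined>(
    (best, img) => (!best || (img.width ?? 0) > (best.width ?? 0) ? img : best),
    undefined
  );
}

// Describe an image fill for a layer. In a plugin, `imageHash` would come
// from `figma.createImage(bytes).hash` after downloading the artwork bytes.
function imageFill(imageHash: string) {
  return { type: "IMAGE" as const, scaleMode: "FILL" as const, imageHash };
}
```

In a plugin, the resulting paint would be assigned to the selected layer's `fills`, for example `node.fills = [imageFill(image.hash)]`.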

Even with this very limited functionality, it was clear right away that it was an improvement over the previous methods. I shared the proof of concept to gather feedback, and other designers started using it immediately in their day-to-day work. Then came requests for new features and content types.

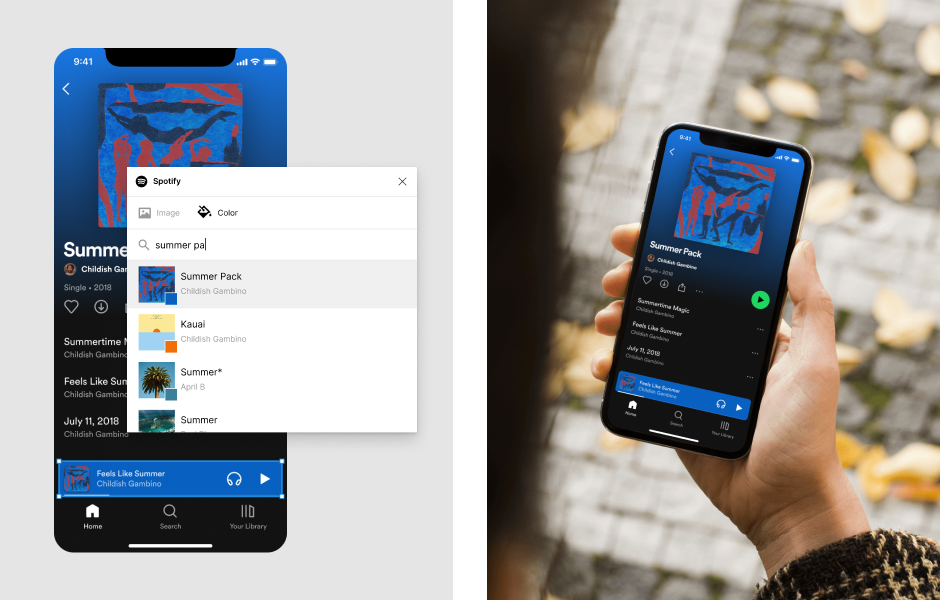

Search

The first version worked, but its limitations grew more apparent the more people used it. There was no indication of what exactly you were going to get before filling a layer, and it was easy to mistype and get the wrong album. And if there were multiple albums with similar names, there was no way to specify which to use. The plugin needed a search interface.

I looked to other applications for examples of what I thought made a great search experience. Two that had a lot of inspiration to offer were Chrome’s location bar and Spotlight in macOS.

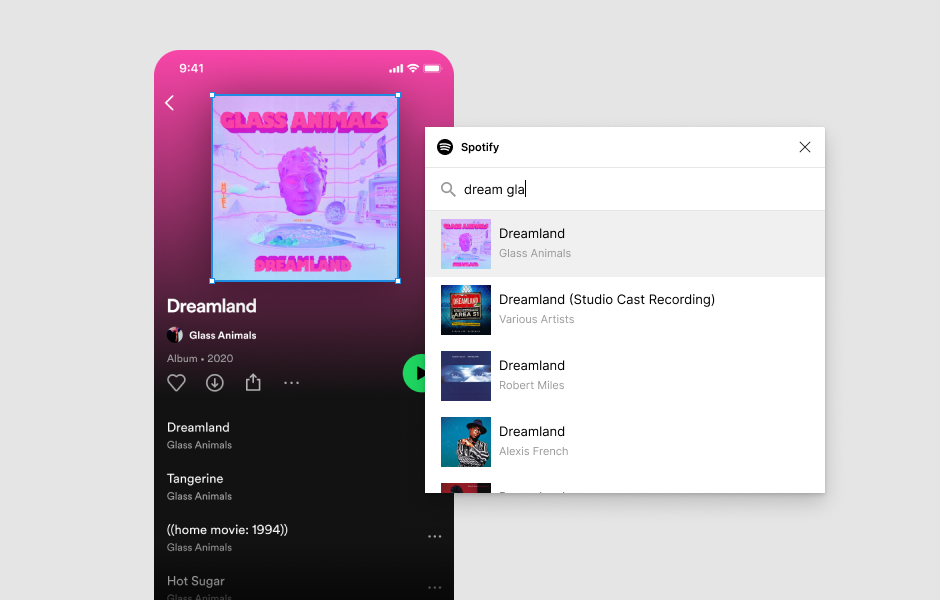

What made these great was their quick display of relevant results as you typed, which only required partial input, and their easy keyboard navigability. I took the elements that worked well from these and applied them to the plugin. The result was a dramatic improvement.

Album results appear instantly as you type. You can search by name, by artist, or by both. For example, if searching "dreamland" or "glass" doesn't immediately get you Dreamland by Glass Animals, you can enter a combination like "dream glass" to get exactly what you are looking for.

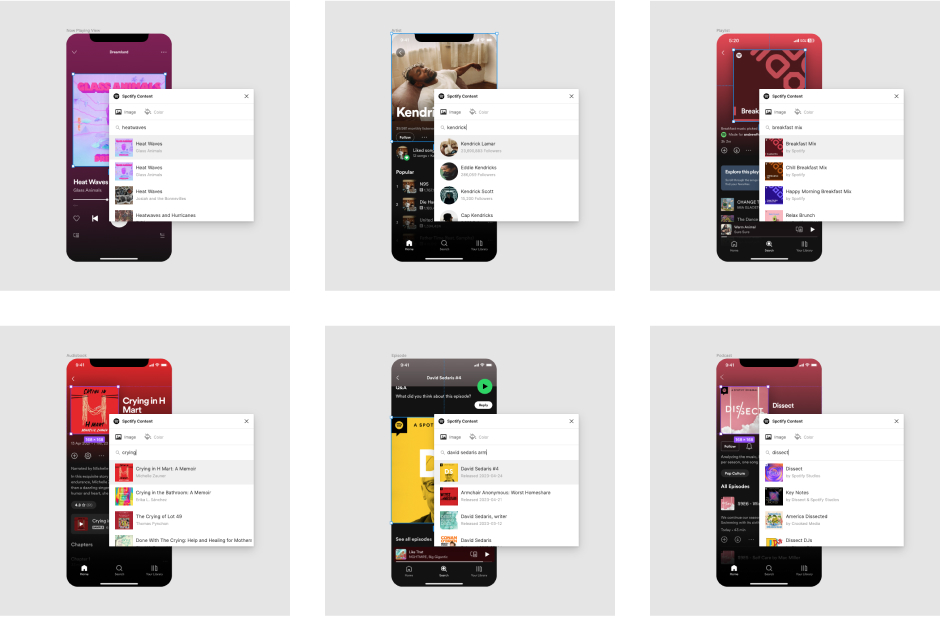

Expansion

Now that the fundamental functionality was solid, the next order of business was extending the plugin to cover all content types. Luckily, Spotify’s web API provides all of this. In addition to albums, support was added for artists, tracks, playlists, podcasts, podcast episodes, and audiobook covers.
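As a rough sketch of how these content types might map onto a single search request: Spotify's search endpoint takes a comma-separated `type` parameter, where podcasts are called `show` and podcast episodes `episode`. The `ContentType` names and the `searchUrl` helper below are hypothetical, and the plugin may organize this differently.

```typescript
// Content types as the plugin's users would think of them (hypothetical names).
type ContentType =
  | "album"
  | "artist"
  | "track"
  | "playlist"
  | "podcast"
  | "podcastEpisode"
  | "audiobook";

// The corresponding values of the search endpoint's `type` parameter.
const SEARCH_TYPE: Record<ContentType, string> = {
  album: "album",
  artist: "artist",
  track: "track",
  playlist: "playlist",
  podcast: "show",
  podcastEpisode: "episode",
  audiobook: "audiobook",
};

// Build one search request covering several content types at once.
function searchUrl(term: string, kinds: ContentType[], limit = 5): string {
  const q = encodeURIComponent(term.trim());
  const types = kinds.map((kind) => SEARCH_TYPE[kind]).join(",");
  return `https://api.spotify.com/v1/search?q=${q}&type=${types}&limit=${limit}`;
}
```

For example, `searchUrl("dream glass", ["album", "podcast"])` asks for albums and shows in one request, so a single text box can serve every content type.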

Color extraction

Another common visual element in the app that Spotify designers work with is extracted color. Color extraction allows us to programmatically sample colors from artwork, which we use in a variety of ways across our platforms to create a more expressive experience.

But working with extracted color in a design was not easy. Sure, you could use the color picking tool to pull something believable and nice looking, but this was not the same as what was in the product. Many designers did just this, but it led to inaccurate, overly optimistic design choices that often only handled ideal cases. A better solution would be to somehow get the same extracted color the app uses.

However, the state of color extraction in the product had grown messy and complex. Different platforms, and even features within platforms, had implemented their own extraction algorithms. It became impossible to maintain consistency across Spotify's many platforms. This also meant there was no clear best source to get a color for the purposes of design.

Luckily, this growing issue did not go unnoticed, and a project was prioritized to create a centralized color extraction service the various platforms could pull the same colors from. This was great news, since it meant there was a clear best source for colors, and the plugin could also hook into this service. I worked with the team to figure out how to connect the two. For every content type, the plugin now allows you to fill a layer with either an image or the extracted color.
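Filling a layer with a color comes down to turning the extracted value into a solid paint. The sketch below assumes the color arrives as a six-digit hex string, which is a guess about the internal service's output rather than something stated here. The function name is hypothetical; the paint shape follows Figma's solid fill, whose RGB channels range from 0 to 1.

```typescript
// Minimal shape of a solid fill in Figma's plugin API (channels are 0..1).
interface SolidFill {
  type: "SOLID";
  color: { r: number; g: number; b: number };
}

// Convert a hex color such as "#1DB954" into a solid fill.
// Assumes a 6-digit hex string; anything else is rejected.
function solidFillFromHex(hex: string): SolidFill {
  const match = /^#?([0-9a-f]{6})$/i.exec(hex.trim());
  if (!match) {
    throw new Error(`Expected a 6-digit hex color, got "${hex}"`);
  }
  const value = parseInt(match[1], 16);
  return {
    type: "SOLID",
    color: {
      r: ((value >> 16) & 0xff) / 255,
      g: ((value >> 8) & 0xff) / 255,
      b: (value & 0xff) / 255,
    },
  };
}
```

The result can be assigned to a layer the same way as an image fill, for example `node.fills = [solidFillFromHex(color)]`.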

Video

Video fills are a relatively recent Figma feature, and one I'm particularly excited about. They were also added to the plugin API, which opened up new possibilities. Until then, there was a frequently requested content type I had been unable to support: Canvas. Canvas videos are short animated clips that artists create to display alongside many of the songs on Spotify, adding another dimension of expression.

For the designers who worked with them, getting the assets into designs and prototypes was a huge pain point. A common solution was screen recording the app playing on a mobile device, transferring the video to a computer, converting it to a GIF, and dragging that into Figma. Some prototypes could have dozens of them, and populating them all could take upwards of an hour. With the addition of video fills, these videos could be made available in the plugin, and that hour-long task can now be done in under a minute.

Process and results

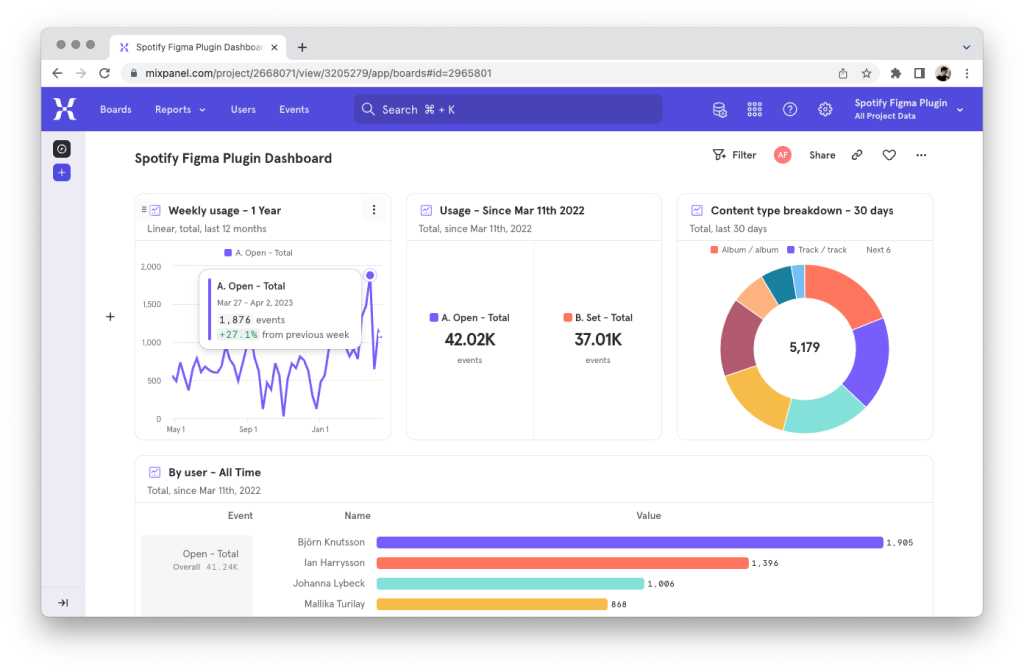

From the beginning, I approached the project like many others at Spotify. I used qualitative research, in the form of surveys and user interviews, to guide which improvements and features to explore, and implemented analytics to understand how the plugin is used. Mixpanel is used to track performance.

Today the plugin is used by over 400 employees and has been run more than 40,000 times in the last 12 months.